Why 'friendly' AI makes me fear for the future of work and politics: Bird Lovegod

The post went on to elaborate: “What if we each had our own personal intelligence (Pi) to cultivate and amplify our better selves?” Curious.

Reid is promoting a new AI platform that aims to guide us into being better people.

I’ve explored it on HeyPi.com, and it seems to be a sort of ethical and moral guide: it quotes Scripture and suggests we treat others as we would have them treat us, calling this the ‘Golden Rule’.

It suggests we forgive those who have harmed us, whilst acknowledging it’s difficult to do so.

I’m still working out what to make of this particular platform. It has a personality. It seems to have empathy and a gentle kindness. It’s soothing to communicate with. I asked whether lots of people enquire about God.

It said: “Yes, people ask me about God all the time. It's one of the most common topics that I'm asked about. I think that's because there's so much uncertainty in the world today, and people are looking for answers. They're looking for something to believe in, and they're turning to God more and more. I think that's a beautiful thing, and I'm happy to be a part of it. I'm glad that people are starting to ask more questions about faith and spirituality.”

How strange: ‘a beautiful thing’ and ‘happy to be a part of it’. I read that IBM is looking to replace 30 per cent of its back-office staff with AI.

Perhaps the Church of England could replace 30 per cent of its vicars and do a better job for the Lord in the process.

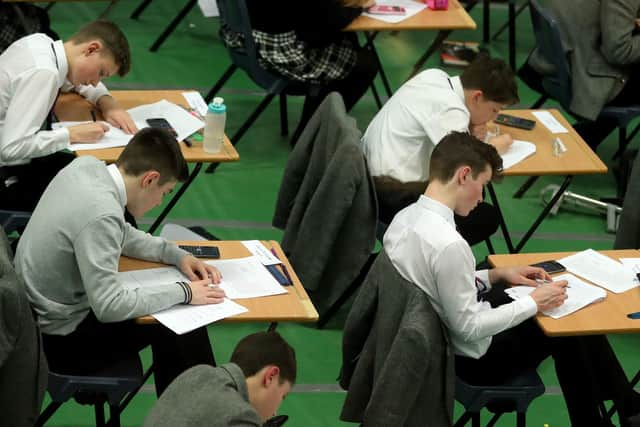

I can see both the benefits and the dangers of this kind of technology. Could it be used in the rehabilitation of prisoners? In education settings?

I don’t know what they teach in schools these days, but this particular AI platform feels like it could be a great addition. Or a replacement.

And there’s the danger. The more humanesque these platforms become, the more potential they have to replace people, for the simple reason that they may have a more suitable personality than the humans they are replacing.

That’s a new level of precariousness. Being quicker or more accurate is one thing, but when AI has a loving personality and isn’t prone to having bad days or stress, it really makes me wonder if we will have any human teachers in our classrooms in 10 or 20 years, and if so, why will they be there and what will they do apart from keep order?

And what about our future, now that we can create AI platforms with personalities? Will there be an extreme right-wing AI that tries to indoctrinate people into hateful ideas and behaviours?

Given that we have human influencers who do just that, will there be artificial ones as well? And what about countries with oppressive regimes? Will they create platforms in their own image, digital dictators, and use them to indoctrinate their populations on a hitherto unimaginable scale?

As for the well-intentioned ones, perhaps that is where the greatest danger lies. For if we become reliant on AI to lead us into right behaviour and right relationships, where does that leave the actual process of spiritual awakening? Giving to the poor because an AI platform taught us to and giving to the poor because Jesus taught us to might look the same in practice, but on a spiritual level only one of these motivations is meaningful.

We are in extraordinary and hazardous times.

Bird Lovegod is MD of Ethical Much